Transformer Neural Network Python

Transformer with Python and TensorFlow 2.0: Encoder and Decoder. This transforms the batch_y tensor from size (batch_size, 1) to (batch_size, 10).
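The snippet above does not say which operation changes the shape of batch_y. One common way a label tensor goes from (batch_size, 1) to (batch_size, 10) is one-hot encoding over 10 classes. The sketch below assumes that reading; the `one_hot` helper, the sample labels, and the use of NumPy instead of TensorFlow are illustrative choices, not taken from the original tutorial.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Turn integer labels of shape (batch_size, 1) into rows of shape (batch_size, num_classes)."""
    labels = np.asarray(labels).reshape(-1)  # flatten (batch_size, 1) to (batch_size,)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0  # set a single 1 per row
    return encoded

# Hypothetical batch of three class labels (assumption: 10 classes, labels 0..9).
batch_y = np.array([[3], [0], [9]])
batch_y_one_hot = one_hot(batch_y)
print(batch_y.shape, "->", batch_y_one_hot.shape)
```

If the original tutorial used a different operation (for example a learned projection), only the shapes would match, not the values.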

List Of Transformer Tutorials For Deep Learning Machinecurve

`a = np.dot(weightVector.T, input) + b` (with a 3x1 weight vector, a 3x1 input, and a scalar bias), then `z = activationFunc(a)` and `return z`.
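The neuron pseudocode on this page can be fleshed out into a runnable sketch. The names `weight_vector`, `bias`, and `activation_func` follow the pseudocode; the choice of a sigmoid activation and the sample values are assumptions made only for illustration.

```python
import numpy as np

def sigmoid(a):
    """Logistic activation, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-a))

def neuron(inputs, weight_vector, bias, activation_func=sigmoid):
    # First transformation: weighted sum, (1x3) dot (3x1) plus a scalar bias.
    a = np.dot(weight_vector.T, inputs) + bias
    # Second transformation: non-linear activation of the weighted sum.
    z = activation_func(a)
    return z

# Illustrative 3x1 input and 3x1 weight vector (assumed values).
inputs = np.array([[1.0], [2.0], [3.0]])
weight_vector = np.array([[0.5], [-0.25], [0.0]])
z = neuron(inputs, weight_vector, bias=0.0)
print(z)
```

With these values the weighted sum is 0, so the sigmoid returns 0.5 as a 1x1 array.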

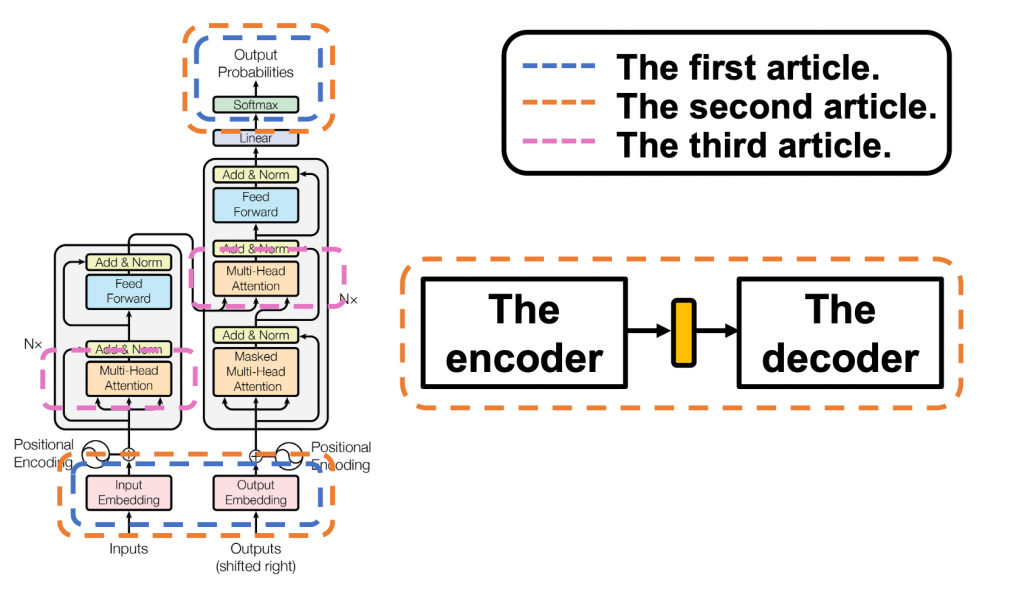

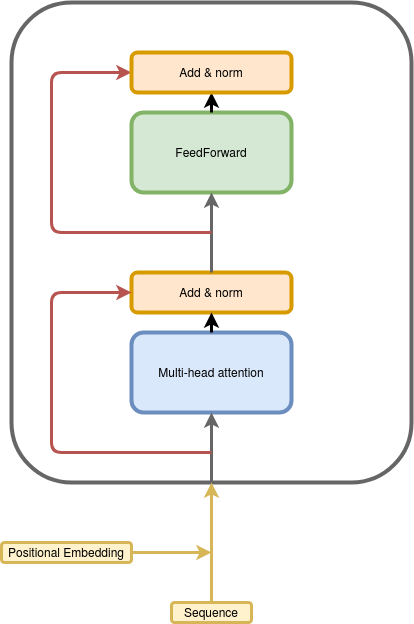

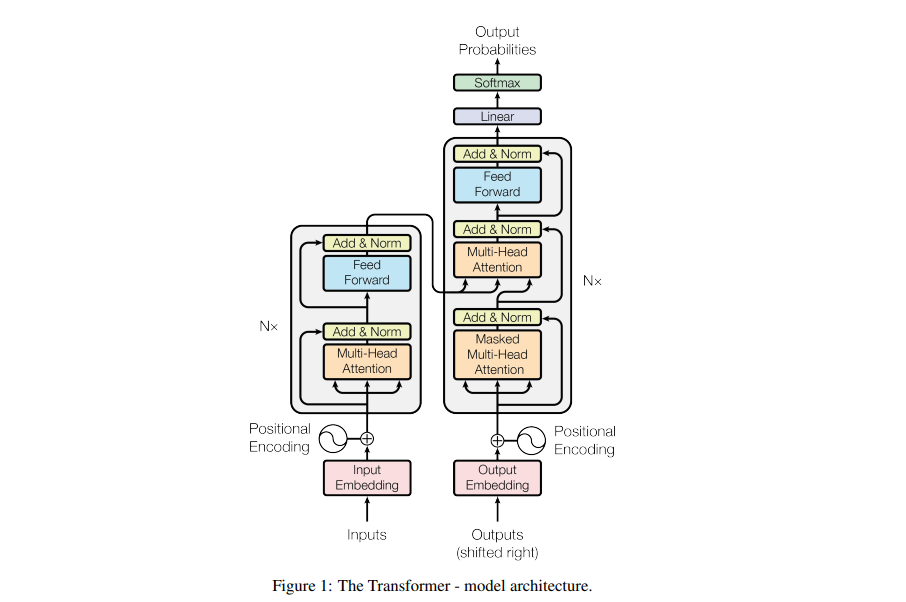

A neuron can be coded in Python in a few lines. A transformer is a deep learning model that adopts the mechanism of attention, differentially weighing the significance of each part of the input data. It is used primarily in the fields of natural language processing (NLP) and computer vision (CV). So think about whether this is worthwhile for what you want to accomplish.
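To make "differentially weighing the significance of each part of the input" concrete, here is a minimal NumPy sketch of scaled dot-product self-attention, the form used in the original Transformer paper. It is a simplification: the learned query, key, and value projections and the multiple heads are left out, and the input `x` is random data chosen only for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # similarity of every query with every key
    weights = softmax(scores, axis=-1)  # each row is a distribution over input positions
    return weights @ v, weights         # weighted mix of the values, plus the weights

# Assumed toy input: 4 tokens, each an 8-dimensional vector.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
output, weights = scaled_dot_product_attention(x, x, x)  # self-attention: q = k = v = x
print(output.shape, weights.shape)
```

Each row of `weights` sums to 1 and says how much every input position contributes to that token's output.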

Starting to build your first neural network: the first step in building a neural network is generating an output from input data. The LARNN is a recurrent attention module consisting of an LSTM cell which can query its own past cell states by means of windowed multi-head attention.

Graph Transformer Networks. Install with `pip install neurobiba` and see the examples. Very easy to use.

This is a great article and great code, so I added the link to the collection of neural networks with Python. Like recurrent neural networks (RNNs), transformers are designed to handle sequential input data such as natural language.

The LARNN cell with attention can easily be used inside a loop on the cell state, just like any other RNN. As others have mentioned, a neural network trained to do the discrete Fourier transform (DFT) will likely work out to be an imperfect approximation of the Fourier transform, and much slower than a good fast Fourier transform (FFT) implementation.
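The DFT is a linear map, so a single bias-free linear layer holding the right fixed weights reproduces it exactly; a trained network would at best approximate those weights. The sketch below builds that weight matrix by hand and compares it with `np.fft.fft`. The helper name `dft_matrix` and the signal length of 8 are illustrative assumptions.

```python
import numpy as np

def dft_matrix(n):
    """Dense n x n DFT matrix with entries W[k, m] = exp(-2*pi*i*k*m / n)."""
    k = np.arange(n)
    return np.exp(-2j * np.pi * np.outer(k, k) / n)

n = 8
rng = np.random.default_rng(0)
signal = rng.normal(size=n)

dense_result = dft_matrix(n) @ signal  # "linear layer" view: O(n^2) multiplications
fft_result = np.fft.fft(signal)        # fast Fourier transform: O(n log n)

max_error = np.max(np.abs(dense_result - fft_result))
print(max_error)
```

The two results agree up to floating-point error, but the dense product costs O(n^2) operations against O(n log n) for the FFT, which is the speed gap the paragraph above refers to.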

You'll do that by creating a weighted sum of the variables. In one of the previous articles, we kicked off the Transformer architecture. This repository is the implementation of Graph Transformer Networks (GTN).

The above figure illustrates the two transformations that a neuron performs. The next line is important. The formulas are derived from the BN-LSTM and the Transformer Network.

They rely on the same principles as recurrent neural networks and LSTMs, but try to overcome their shortcomings. Small collection of functions for neural networks. Kim, Graph Transformer Networks, in Advances in Neural Information Processing Systems (NeurIPS 2019).

Take your NLP knowledge to the next level and become an AI language understanding expert by mastering the quantum leap of Transformer neural network models. Key features: build and implement state-of-the-art language models, such as the original Transformer, BERT, T5, and GPT-2, using concepts that outperform classical deep learning models; go through hands-on applications in Python using Google Colaboratory notebooks with nothing to install on a local machine. Test transformer. This function returns the calculated output of the neural network. Stack Overflow: "Python - sudden drop in accuracy while training transformer neural network with 6 layers 512 embedding but model trains very well with 4 layers 128 embedding".

The feedFwd function calculates a single forward pass for the neural network. The Transformer is a huge system with many different parts. Seongjun Yun, Minbyul Jeong, Raehyun Kim, Jaewoo Kang, Hyunwoo J.
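The source for feedFwd is not reproduced on this page, so the following is only a sketch of what a single forward pass through a small fully connected network might look like. The snake_case name `feed_fwd`, its signature, the layer sizes, and the sigmoid activation are all assumptions.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def feed_fwd(inputs, layers):
    """One forward pass: apply each (weights, bias) pair in turn and return the final output."""
    activation = inputs
    for weights, bias in layers:
        # Weighted sum followed by the activation, layer by layer.
        activation = sigmoid(weights @ activation + bias)
    return activation

# Hypothetical network: 3 inputs -> 4 hidden units -> 1 output.
rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 3)), np.zeros((4, 1))),
    (rng.normal(size=(1, 4)), np.zeros((1, 1))),
]
inputs = np.array([[1.0], [2.0], [3.0]])
output = feed_fwd(inputs, layers)
print(output.shape)
```

The function returns the output of the last layer, which is the calculated output of the network for that input.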

The neurobiba example, in outline: create weights for 2 input neurons and 1 output, create the data, create the answers, then train. Pseudocode: `def neuron(input, weightVector, bias):`

The first thing you'll need to do is represent the inputs with Python and NumPy.

What Is A Transformer An Introduction To Transformers And By Maxime Inside Machine Learning Medium

How To Code The Transformer In Pytorch By Samuel Lynn Evans Towards Data Science

10.7. Transformer: Dive Into Deep Learning 0.17.0 Documentation

Transformer Xl Explained Combining Transformers And Rnns Into A State Of The Art Language Model By Rani Horev Towards Data Science

Transformer As A Graph Neural Network: DGL 0.6.1 Documentation

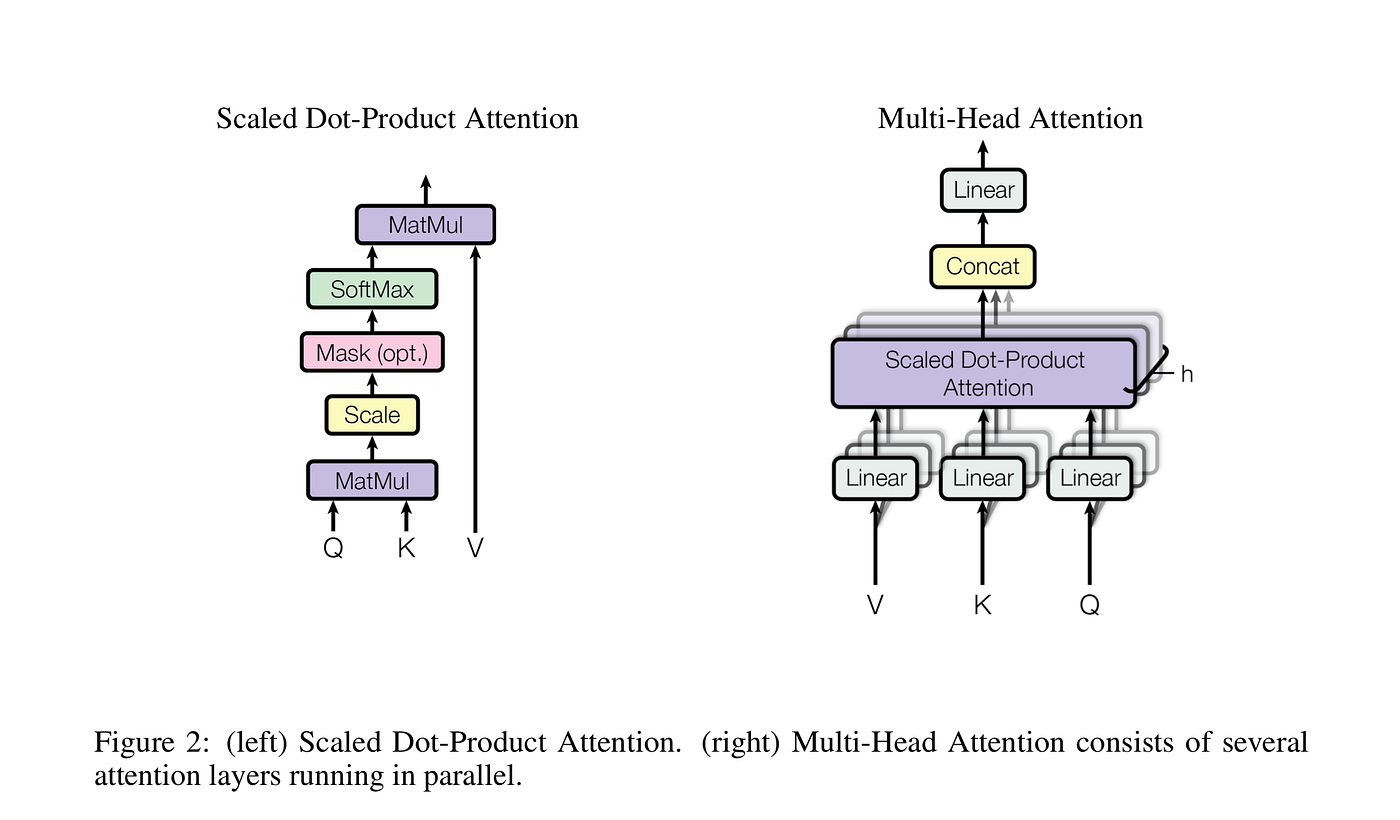

Transformer Attention Is All You Need By Pranay Dugar Towards Data Science

Github Lilianweng Transformer Tensorflow Implementation Of Transformer Model In Tensorflow

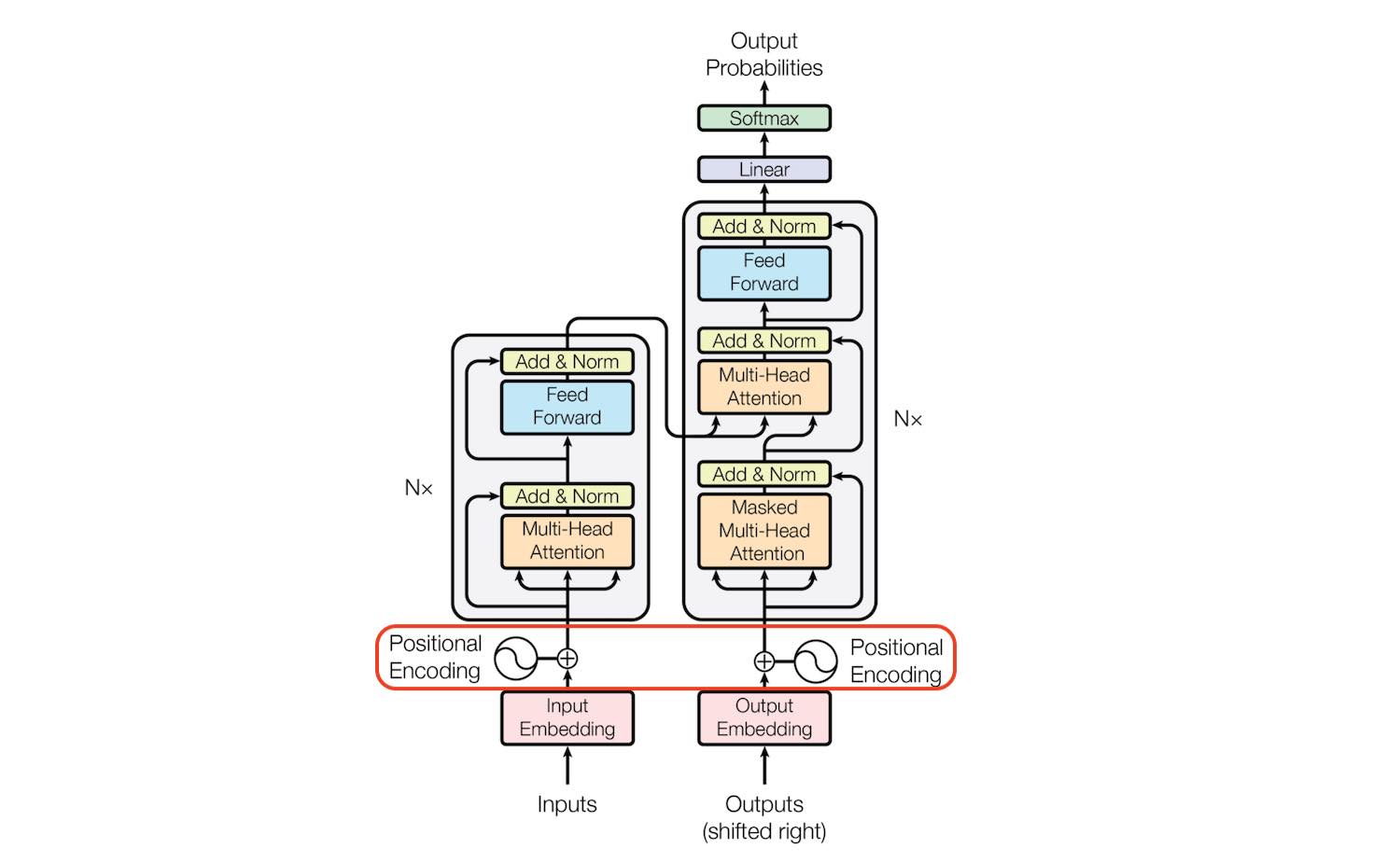

Transformer Architecture The Positional Encoding Amirhossein Kazemnejad S Blog

Transformer With Python And Tensorflow 2.0: Encoder Decoder

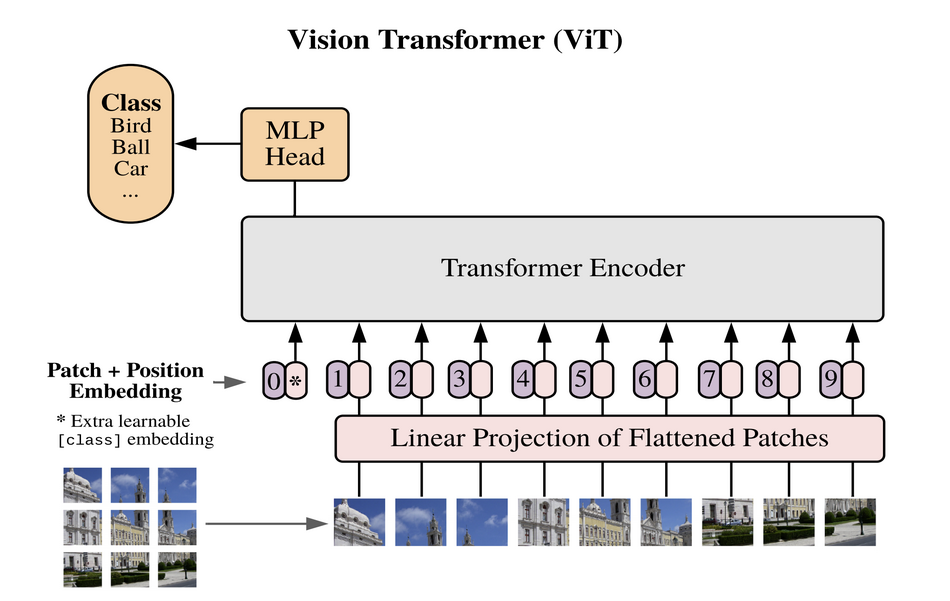

Vision Transformers Transformers Work Well In Computer Vision Too

Nlp Model Building Transformers Attention More Kaggle

Positional Encoding Residual Connections Padding Masks Covering The Rest Of Transformer Components Data Science Blog

Transformer Architecture Self Attention Kaggle

Music Genre Classification Transformers Vs Recurrent Neural Networks By Youness Mansar Towards Data Science

Introduction To Transformers In Machine Learning Machinecurve

Research Guide For Transformers Nearly Everything You Need To Know In By Derrick Mwiti Heartbeat

Amazon Com Transformers For Natural Language Processing Build Innovative Deep Neural Network Architectures For Nlp With Python Pytorch Tensorflow Bert Roberta And More Ebook Rothman Denis Kindle Store
