PyTorch Transformer Decoder Beam Search

PyTorch implementation of beam search decoding for seq2seq models (topics: search, natural-language-processing, beam, decoding, torch, pytorch, greedy, seq2seq, neural). See also: CTC beam search decoders for PyTorch.

Beam search for the PyTorch Transformer decoder tackles the problem of decoding in text generation. `do_early_stopping` (`bool`, *optional*, defaults to `False`). Posted on July 31, 2020 by Sandra.

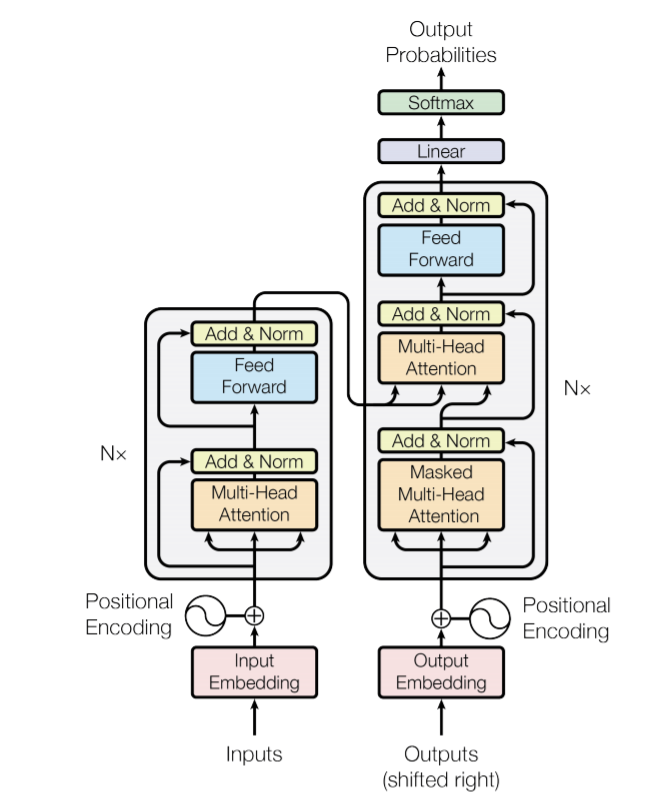

Adding another loop over the batch would make the algorithm O(n^4). The PyTorch class is `torch.nn.TransformerDecoder(decoder_layer, num_layers, norm=None)`, where `decoder_layer` is an instance of the `TransformerDecoderLayer` class (required).
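
Below is a minimal sketch of that constructor together with a causally masked forward pass. It assumes PyTorch 1.9 or later, which is needed for `batch_first=True`. The sizes and the random `memory`/`tgt` tensors are placeholders, not values from the original code. The last lines check one property: changing the final target position leaves the earlier outputs untouched, and this is what makes token-by-token decoding possible.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
d_model, nhead, num_layers = 32, 4, 2
batch_size, src_len, tgt_len = 3, 7, 5

# decoder_layer is one TransformerDecoderLayer; TransformerDecoder stacks num_layers copies.
decoder_layer = nn.TransformerDecoderLayer(d_model=d_model, nhead=nhead, batch_first=True)
decoder = nn.TransformerDecoder(decoder_layer, num_layers=num_layers, norm=None)
decoder.eval()  # turn off dropout so repeated calls give identical results

memory = torch.randn(batch_size, src_len, d_model)  # stand-in for the encoder output
tgt = torch.randn(batch_size, tgt_len, d_model)     # stand-in for the embedded target prefix

# Causal mask: -inf above the diagonal, so position i attends only to positions <= i.
causal_mask = torch.triu(torch.full((tgt_len, tgt_len), float("-inf")), diagonal=1)

with torch.no_grad():
    out = decoder(tgt, memory, tgt_mask=causal_mask)
    # Change only the last target position and decode again.
    tgt_changed = tgt.clone()
    tgt_changed[:, -1] = torch.randn(batch_size, d_model)
    out_changed = decoder(tgt_changed, memory, tgt_mask=causal_mask)

print(out.shape)  # torch.Size([3, 5, 32])
print(torch.allclose(out[:, :-1], out_changed[:, :-1], atol=1e-5))  # True
```

In a real model, `tgt` would hold the embedded tokens generated so far. A linear projection plus `log_softmax` on the last position would then give the next-token scores used by greedy or beam search.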

I based my decoding function, which turns the output probabilities into tokens, on a greedy decoding method that I am now trying to replace.
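
Here is a plain-Python sketch of greedy decoding for one sentence, the strategy being replaced. It is not the author's function. `step_fn` is a hypothetical interface that returns next-token log-probabilities for a prefix. In PyTorch it would wrap the decoder, and the `max` call would become `argmax` over the last dimension. `toy_step` is a made-up four-token model.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical interface: step_fn(prefix) returns next-token log-probabilities
# (one float per vocabulary entry) given the tokens generated so far.
StepFn = Callable[[Sequence[int]], List[float]]


def greedy_decode(step_fn: StepFn, bos: int, eos: int, max_len: int) -> List[int]:
    """Greedy decoding for a single sentence (batch size 1)."""
    tokens = [bos]
    for _ in range(max_len):
        log_probs = step_fn(tokens)
        # Commit to the single most probable next token; no alternatives are kept.
        next_token = max(range(len(log_probs)), key=log_probs.__getitem__)
        tokens.append(next_token)
        if next_token == eos:
            break
    return tokens


def toy_step(prefix: Sequence[int]) -> List[float]:
    """Toy model over the vocabulary {0: BOS, 1: EOS, 2, 3}, conditioned on the last token."""
    table = {
        0: [0.0, 0.1, 0.6, 0.3],
        2: [0.0, 0.2, 0.1, 0.7],
        3: [0.0, 0.8, 0.1, 0.1],
    }
    return [math.log(p) if p > 0 else float("-inf") for p in table[prefix[-1]]]


print(greedy_decode(toy_step, bos=0, eos=1, max_len=10))  # [0, 2, 3, 1]
```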

`run.py` trains a German-English (de-en) translation model. The decoding function is implemented with `batch_size = 1` in mind. Before a formal introduction to greedy search, let us formalize the search problem.
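
One way to write the greedy rule, and the sequence-level objective it approximates, is shown below. The notation is in the style of *Dive into Deep Learning* and is an assumption here: output vocabulary $\mathcal{Y}$, encoder context $\mathbf{c}$, and maximum output length $T'$.

$$
y_{t'} = \operatorname*{argmax}_{y \in \mathcal{Y}} P(y \mid y_1, \ldots, y_{t'-1}, \mathbf{c}),
\qquad
\hat{\mathbf{y}} = \operatorname*{argmax}_{y_1, \ldots, y_{T'}} \prod_{t'=1}^{T'} P(y_{t'} \mid y_1, \ldots, y_{t'-1}, \mathbf{c}).
$$

Greedy search does $O(|\mathcal{Y}|\,T')$ work. Solving the right-hand objective exactly by enumeration means scoring $O(|\mathcal{Y}|^{T'})$ sequences. Beam search with beam size $k$ sits in between at $O(k\,|\mathcal{Y}|\,T')$.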

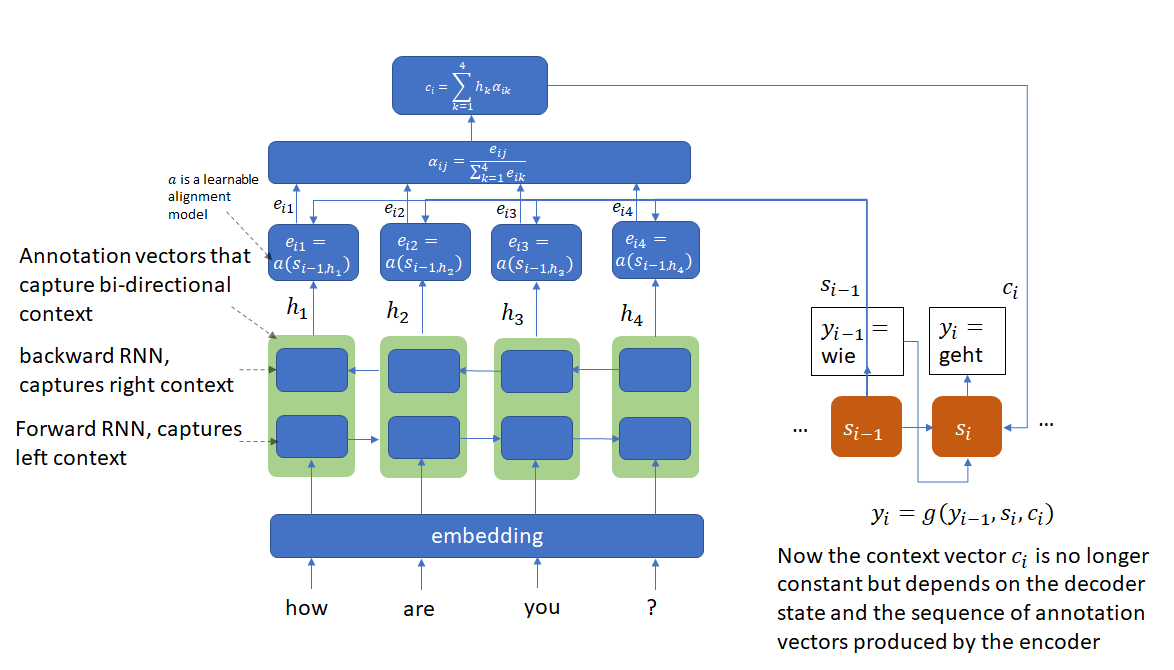

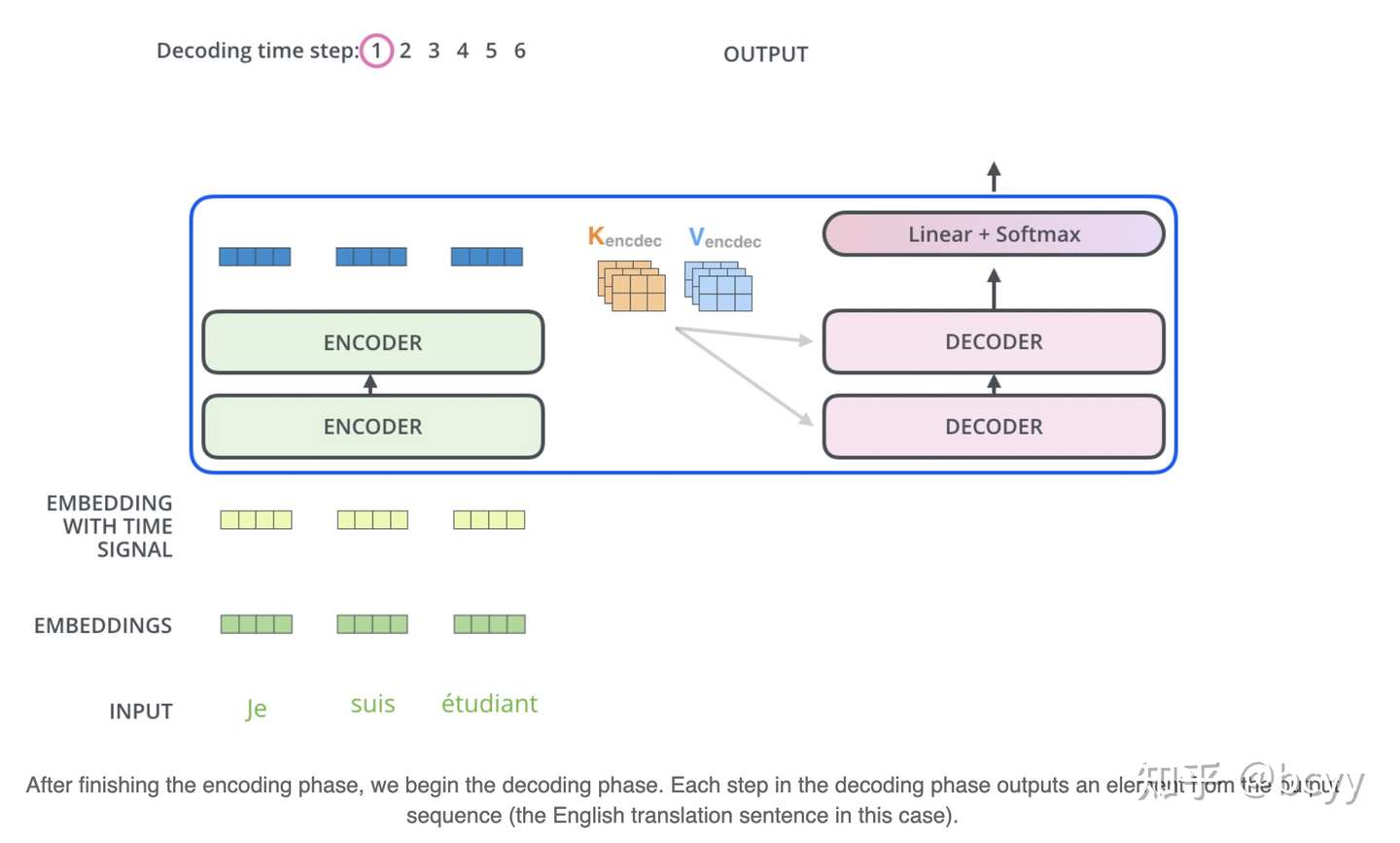

The `do_early_stopping` flag controls whether to stop the beam search once at least `num_beams` sentences are finished per batch. See also *A Beam Search Decoder for Phrase-Based Statistical Machine Translation Models* (2004). The Transformer decoder is not recurrent (it is self-attentive), but it is still auto-regressive, i.e., generating a token is conditioned on the previously generated tokens.
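
Below is a minimal sketch of that early-stopping check for one example. It assumes hypothesis scores are plain sums of log-probabilities, which can only decrease as a sequence grows. `FinishedHypotheses` and `best_running_score` are hypothetical names, not a library API. With early stopping on, the search stops as soon as `num_beams` hypotheses are finished. With it off, the search continues while some running beam could still beat the worst finished one.

```python
import heapq
from typing import List, Sequence, Tuple


class FinishedHypotheses:
    """Keeps the num_beams best finished hypotheses for one example (hypothetical helper)."""

    def __init__(self, num_beams: int, do_early_stopping: bool = False):
        self.num_beams = num_beams
        self.do_early_stopping = do_early_stopping
        self._heap: List[Tuple[float, Tuple[int, ...]]] = []  # min-heap: worst score on top

    def add(self, tokens: Sequence[int], score: float) -> None:
        item = (score, tuple(tokens))
        if len(self._heap) < self.num_beams:
            heapq.heappush(self._heap, item)
        elif score > self._heap[0][0]:
            heapq.heapreplace(self._heap, item)  # evict the current worst hypothesis

    def is_done(self, best_running_score: float) -> bool:
        if len(self._heap) < self.num_beams:
            return False  # not enough finished sentences yet
        if self.do_early_stopping:
            return True  # stop as soon as num_beams sentences are finished
        # Log-prob sums never increase, so a running beam that already scores
        # at or below the worst finished hypothesis can never overtake it.
        return self._heap[0][0] >= best_running_score


hyps = FinishedHypotheses(num_beams=2, do_early_stopping=False)
hyps.add([5, 1], -1.2)
print(hyps.is_done(best_running_score=-0.5))  # False: only one finished hypothesis
hyps.add([7, 1], -2.0)
print(hyps.is_done(best_running_score=-0.5))  # False: -0.5 could still beat -2.0
print(hyps.is_done(best_running_score=-3.0))  # True: no running beam can catch up
```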

The original model was not implemented by me. I'm losing my head over implementing beam search for Transformer decoding in PyTorch. This is sample code for beam search decoding in PyTorch.
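
Here is a minimal, framework-free sketch of sentence-by-sentence beam search, in plain Python so the bookkeeping is visible. It assumes a hypothetical `step_fn(prefix)` that returns next-token log-probabilities. With the PyTorch decoder, this would be the decoder, an output projection and `log_softmax` on the last position. `toy_step` is a made-up model. This is one simple variant of beam search (finished hypotheses leave the beam), not the code from the repository described here.

```python
import math
from typing import Callable, List, Sequence, Tuple

StepFn = Callable[[Sequence[int]], List[float]]  # prefix -> next-token log-probs (hypothetical)
Hypothesis = Tuple[List[int], float]             # (tokens, sum of log-probabilities)


def beam_search(step_fn: StepFn, bos: int, eos: int,
                beam_size: int, max_len: int) -> List[Hypothesis]:
    """Decode one sentence, keeping the beam_size best prefixes at every step."""
    beams: List[Hypothesis] = [([bos], 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        # Expand every live beam by every token with non-zero probability.
        candidates = [
            (tokens + [tok], score + lp)
            for tokens, score in beams
            for tok, lp in enumerate(step_fn(tokens))
            if lp != float("-inf")
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            # Hypotheses that emit EOS leave the beam, so the beam can shrink.
            (finished if tokens[-1] == eos else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # beams still open at max_len are kept as they are
    return sorted(finished, key=lambda h: h[1], reverse=True)


def toy_step(prefix: Sequence[int]) -> List[float]:
    """Toy model over {0: BOS, 1: EOS, 2, 3}, conditioned on the whole prefix."""
    table = {
        (0,): [0.0, 0.0, 0.55, 0.45],
        (0, 2): [0.0, 0.4, 0.3, 0.3],
        (0, 3): [0.0, 0.9, 0.05, 0.05],
    }
    probs = table.get(tuple(prefix), [0.0, 1.0, 0.0, 0.0])  # unseen prefix: always end
    return [math.log(p) if p > 0 else float("-inf") for p in probs]


best_tokens, best_score = beam_search(toy_step, bos=0, eos=1, beam_size=2, max_len=5)[0]
print(best_tokens, round(math.exp(best_score), 3))  # [0, 3, 1] 0.405
```

With `beam_size=2`, the search keeps the prefix `[0, 3]` (probability 0.45) alive even though `[0, 2]` (0.55) looks better after one step. It finishes with probability 0.45 × 0.9 = 0.405. Committing greedily to token 2 would end at 0.55 × 0.4 = 0.22.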

`num_layers`: the number of sub-decoder-layers in the decoder (required). Beam search can also be run batch-wise in PyTorch. For `length_penalty`, set values < 1.0 to encourage the model to generate shorter sequences, or values > 1.0 to encourage it to produce longer sequences.
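
Below is a minimal sketch of how such a penalty can change which finished hypothesis wins. It assumes the common form in which the summed log-probability is divided by `length ** length_penalty`; check the scorer in the library version you use. Log-probabilities are negative, so a larger denominator pulls long hypotheses' scores toward zero. The function names and the two hypotheses are made up.

```python
from typing import List, Tuple


def length_penalized_score(sum_log_prob: float, length: int, length_penalty: float) -> float:
    """Divide the summed log-probability by length ** length_penalty (assumed form)."""
    return sum_log_prob / (length ** length_penalty)


def pick_best(hyps: List[Tuple[str, float, int]], length_penalty: float) -> str:
    """hyps holds (name, sum of log-probs, length); return the best name after the penalty."""
    return max(hyps, key=lambda h: length_penalized_score(h[1], h[2], length_penalty))[0]


# Two made-up finished hypotheses: a short, likelier one and a long, less likely one.
hyps = [("short", -2.0, 2), ("long", -3.0, 4)]
print(pick_best(hyps, length_penalty=0.5))  # short: -1.414 vs -1.5
print(pick_best(hyps, length_penalty=2.0))  # long: -0.5 vs -0.1875
```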

There are two beam search implementations. Although this implementation is slow, its simplicity may help your understanding.

I'm trying to implement a beam search decoding strategy in a text generation model. Beam search works exactly the same way as with recurrent models. I understand the idea, but I am not sure how to scale it to several examples at once.
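
One way to handle several examples at once is to score all `beam_size * vocab_size` continuations of each example in a single flat list and keep the top `beam_size`. Each kept flat index is then split back into (source beam, token). The sketch below does this in plain Python so the index arithmetic is visible. It assumes every example keeps exactly `beam_size` live beams, and `batched_beam_step` is a hypothetical name. In PyTorch the same step is commonly written with `torch.topk` on scores reshaped to `(batch_size, beam_size * vocab_size)`. Integer division and modulo by `vocab_size` then recover the beam and the token.

```python
from typing import List, Tuple


def batched_beam_step(
    beam_scores: List[List[float]],           # [batch][beam]: running log-prob of each beam
    next_log_probs: List[List[List[float]]],  # [batch][beam][vocab]: decoder output per beam
    beam_size: int,
) -> List[List[Tuple[int, int, float]]]:
    """One beam-search step for a whole batch; returns (source beam, token, score) per example."""
    results = []
    for scores, log_probs in zip(beam_scores, next_log_probs):
        vocab_size = len(log_probs[0])
        # Flat index i corresponds to beam i // vocab_size and token i % vocab_size.
        flat = [s + lp for s, row in zip(scores, log_probs) for lp in row]
        top = sorted(range(len(flat)), key=flat.__getitem__, reverse=True)[:beam_size]
        results.append([(*divmod(i, vocab_size), flat[i]) for i in top])
    return results


beam_scores = [[0.0, -1.0], [-0.5, -0.3]]
next_log_probs = [
    [[-0.1, -2.0, -3.0], [-0.2, -0.5, -4.0]],
    [[-1.0, -0.1, -2.0], [-3.0, -2.0, -0.05]],
]
for example in batched_beam_step(beam_scores, next_log_probs, beam_size=2):
    print([(beam, token) for beam, token, _ in example])
# [(0, 0), (1, 0)]
# [(1, 2), (0, 1)]
```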

`datasets.py` has code for loading and processing data. `trainer.py` has code for training the model.

`norm`: the layer normalization component (optional). `beam.py` contains the beam search. In Section 9.7 we predicted the output sequence token by token until the special end-of-sequence token was predicted. In this section we will begin by formalizing this greedy search strategy and exploring issues with it, then compare it with other alternatives.
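
A toy calculation shows the main issue with greedy search. The conditional probabilities are made up, not taken from any real model. Greedy search takes the most probable token at each of four steps. Choosing the second most probable token at step 2 (0.3 instead of 0.4) leads to different, more confident later steps:

$$
\begin{aligned}
P_{\text{greedy}} &= 0.5 \times 0.4 \times 0.4 \times 0.6 = 0.048,\\
P_{\text{alternative}} &= 0.5 \times 0.3 \times 0.6 \times 0.6 = 0.054 > 0.048.
\end{aligned}
$$

Committing to the locally best token at each step therefore does not guarantee the most probable sequence overall.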

`metrics.py` contains the accuracy metric. (The Pytorch Beam Search Decoding project, its images and its README contents likely belong to the Budzianowski organization, which is not affiliated with Awesome Open Source.)

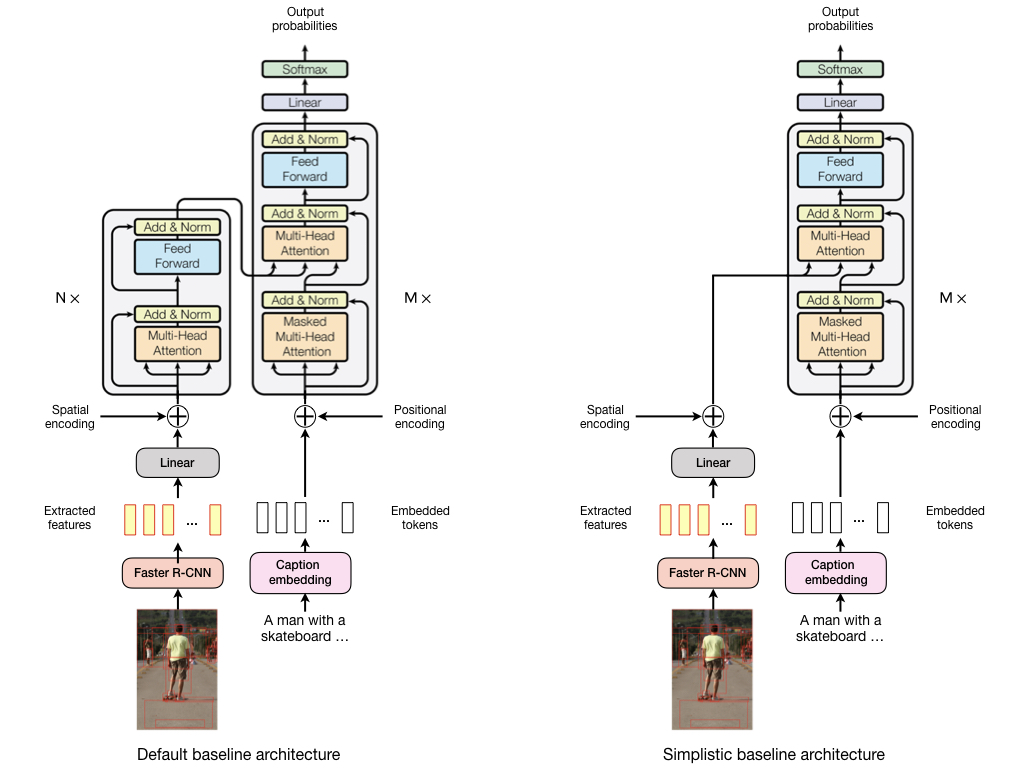

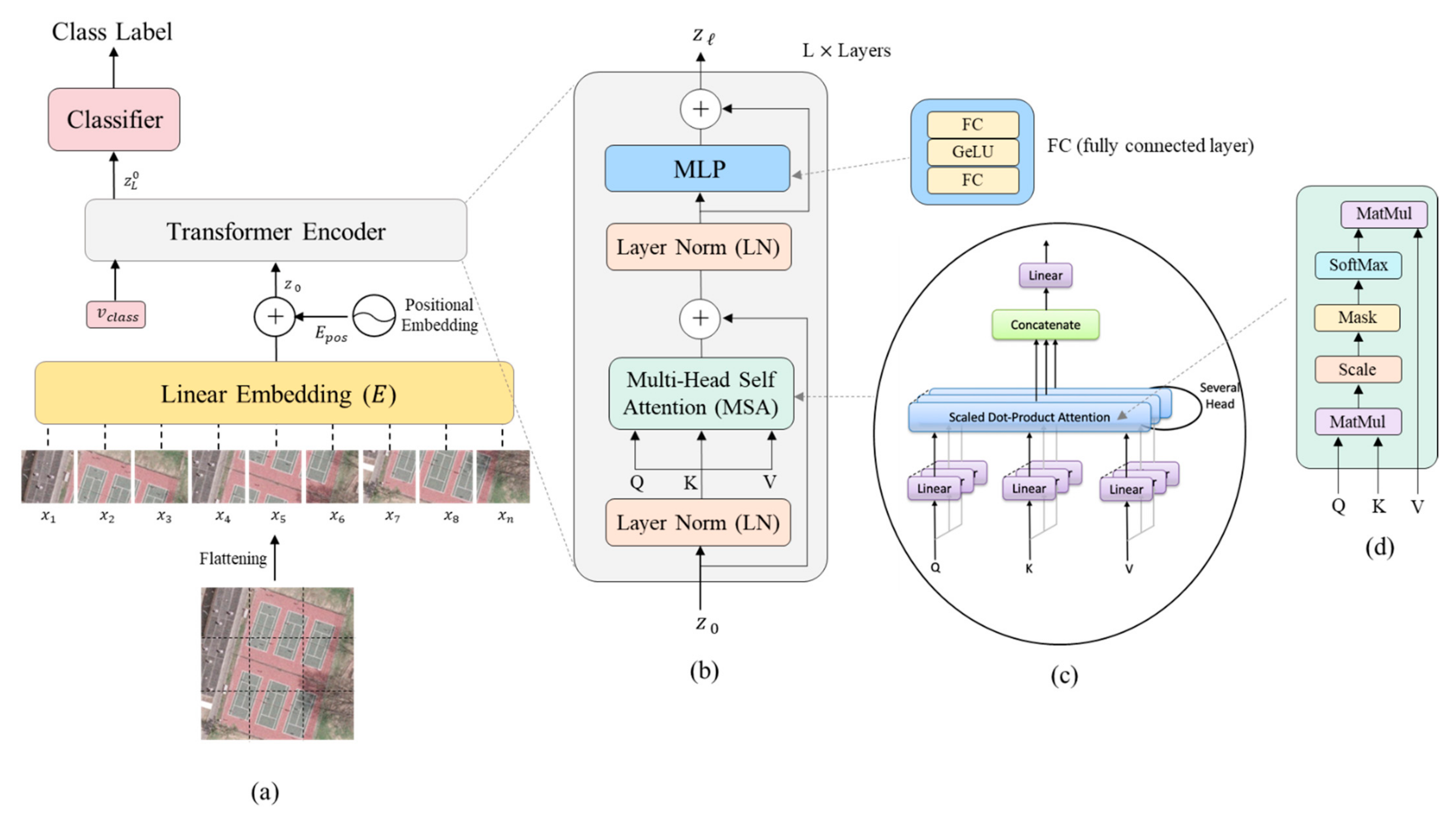

`models.py` includes the Transformer's encoder, decoder and multi-head attention. `batch_beam_search_decoding` decodes sentences as a batch. The Transformer, introduced in the paper *Attention Is All You Need*, is a powerful sequence-to-sequence modeling architecture capable of producing state-of-the-art neural machine translation (NMT) systems.

In this tutorial, you discovered the greedy search and beam search decoding algorithms that can be used on text generation problems. Recently, the fairseq team has explored large-scale semi-supervised training of Transformers using back-translated data, further improving translation quality over the … Alternatives to greedy search include exhaustive search and beam search.
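
Exhaustive search can be sketched in a few lines, which also makes its cost visible: every token sequence up to `max_len` is scored. This is plain Python with a hypothetical `step_fn` and a tiny made-up `toy_step` model. It is only practical for toy vocabularies.

```python
import itertools
import math
from typing import Callable, List, Optional, Sequence, Tuple

StepFn = Callable[[Sequence[int]], List[float]]  # prefix -> next-token log-probs (hypothetical)


def exhaustive_search(step_fn: StepFn, bos: int, eos: int, vocab_size: int,
                      max_len: int) -> Tuple[Optional[List[int]], float]:
    """Score every candidate output; the work grows as vocab_size ** max_len."""
    best: Optional[List[int]] = None
    best_score = float("-inf")
    for length in range(1, max_len + 1):
        for body in itertools.product(range(vocab_size), repeat=length):
            # A complete output ends at its first EOS, or is cut off at max_len.
            if eos in body[:-1] or (body[-1] != eos and length < max_len):
                continue
            tokens, score = [bos], 0.0
            for tok in body:
                score += step_fn(tokens)[tok]
                tokens.append(tok)
            if score > best_score:
                best, best_score = tokens, score
    return best, best_score


def toy_step(prefix: Sequence[int]) -> List[float]:
    """Toy model over {0: BOS, 1: EOS, 2, 3}, conditioned on the whole prefix."""
    table = {
        (0,): [0.0, 0.0, 0.55, 0.45],
        (0, 2): [0.0, 0.4, 0.3, 0.3],
        (0, 3): [0.0, 0.9, 0.05, 0.05],
    }
    probs = table.get(tuple(prefix), [0.0, 1.0, 0.0, 0.0])  # unseen prefix: always end
    return [math.log(p) if p > 0 else float("-inf") for p in probs]


best, score = exhaustive_search(toy_step, bos=0, eos=1, vocab_size=4, max_len=3)
print(best, round(math.exp(score), 3))  # [0, 3, 1] 0.405
```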

`beam_search_decoding` decodes sentence by sentence. `TransformerDecoder` is a stack of N decoder layers.


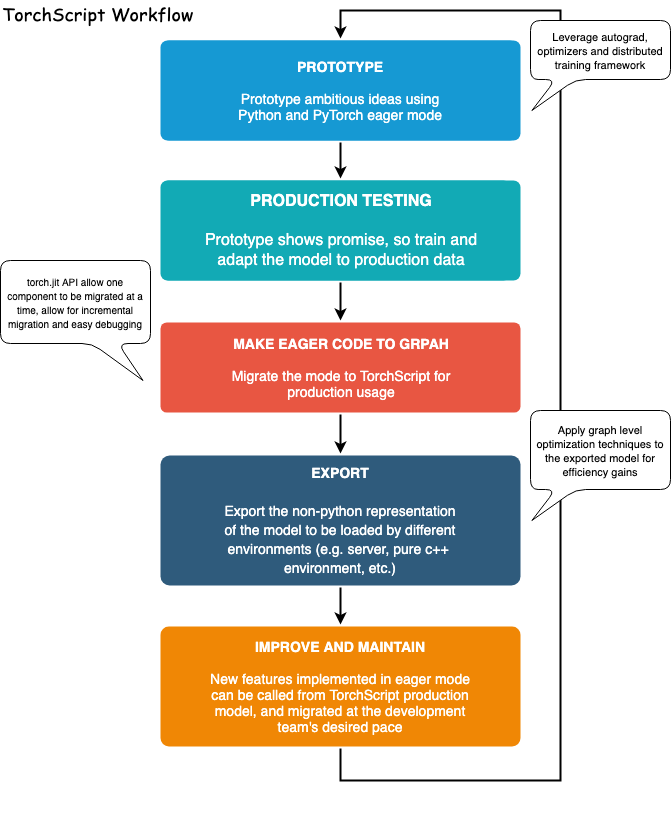


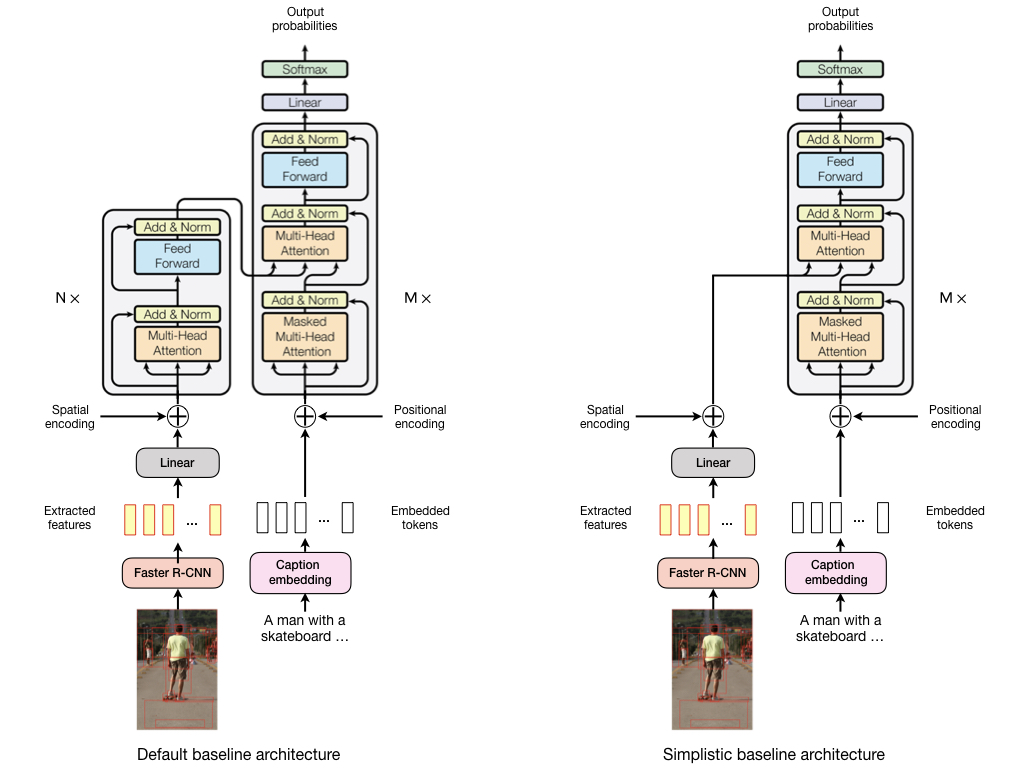
